I Built an MCP Server for My Site

After watching the specification build up this spring, I got around to building my own MCP server (for the posts on this site) a couple weeks ago. Since then I’ve been building agents on it and having a grand ol’ time seeing how they explore and what they find, hidden in thousands of entries.

Setting up a server is trivially easy: you can get started in a couple dozen lines. I used the official TypeScript SDK so I could host it on val.town while I’m still experimenting.

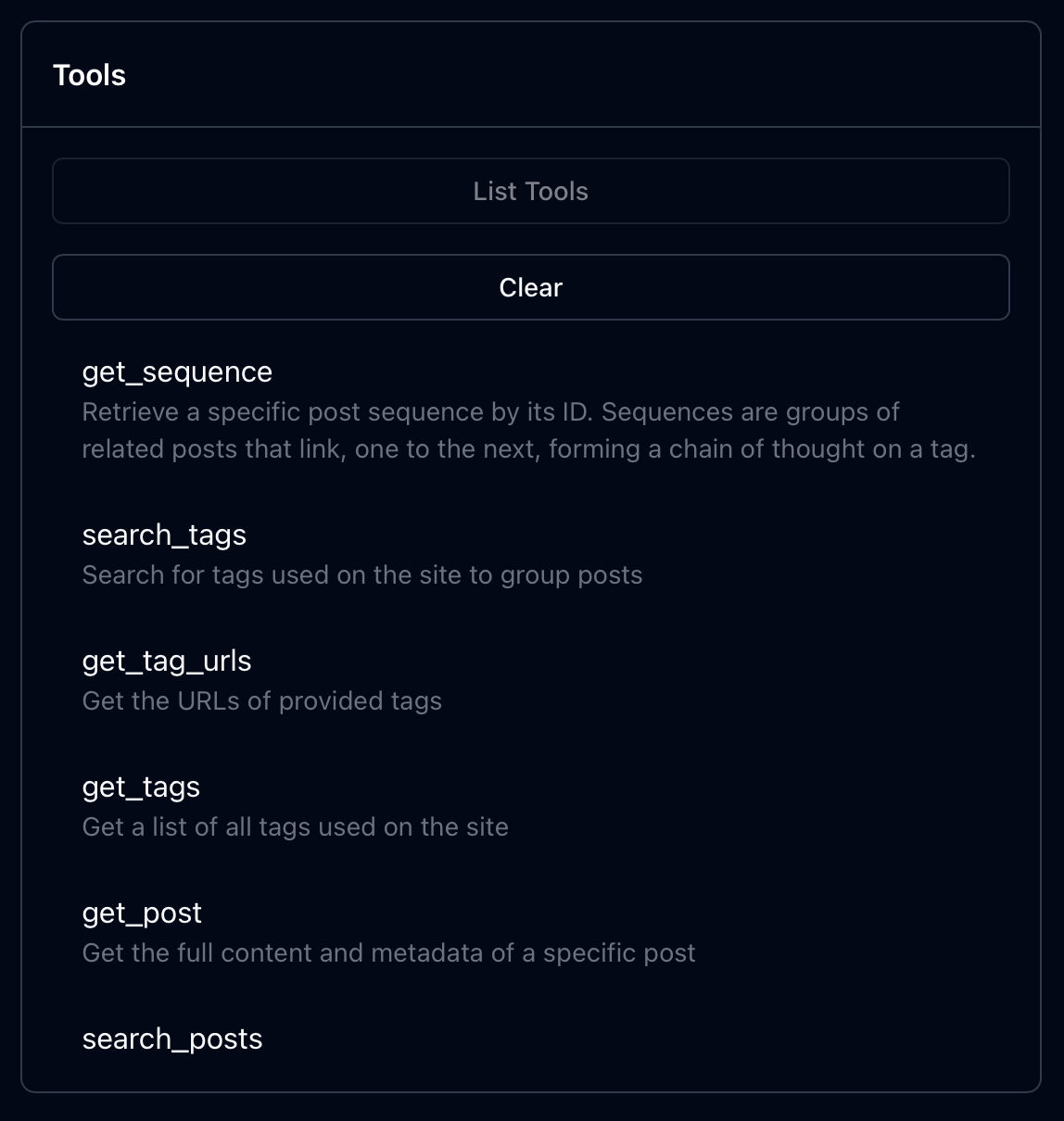

Tools

I recommend using the Model Context Protocol Inspector to interrogate and explore the tools you’re building. As of today, these are the tools I’ve built for this site:

- get_tags for tags
- get_post for a post
- search_posts for general search
- get_proverbs for proverbs
- get_sequences for sequences
- get_sequence for a sequence
- search_tags for tags
- get_tag_urls for getting URLs for tags

Things I Learned About MCP Tools

Have every tool call return enough tokens to answer, and no more.

You’ll notice that I made a dedicated tool for getting URLs for tags. This is because I found, in experimenting with this MCP server with LLMs, that the LLM and I mostly just need to consider tags on their own (“what tags exist”, “does this tag exist”), not the links to the tags. And since each tag is a single word, a tag tool that returns a list of tags with URLs is returning mostly URLs and not the actual tags. That wastes a bunch of tokens and compute.
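To make the difference concrete, here is a minimal sketch of the split. It is not the code running on this server: the tag data, the example.com URLs, and the function names are hypothetical stand-ins for the get_tags and get_tag_urls tools.

```typescript
// Hypothetical tag data; the names and URL pattern are made up for illustration.
type Tag = { name: string; url: string };

const TAGS: Tag[] = [
  { name: "mcp", url: "https://example.com/tags/mcp/" },
  { name: "agents", url: "https://example.com/tags/agents/" },
  { name: "proverbs", url: "https://example.com/tags/proverbs/" },
];

// Lean tool: tag names only, which is all most questions need.
function getTags(): string {
  return TAGS.map((tag) => tag.name).join("\n");
}

// Dedicated tool: URLs only for the tags the caller actually asks about.
function getTagUrls(names: string[]): string {
  return TAGS.filter((tag) => names.includes(tag.name))
    .map((tag) => `${tag.name}: ${tag.url}`)
    .join("\n");
}

// The combined alternative, kept only to compare payload sizes.
function getTagsWithUrls(): string {
  return TAGS.map((tag) => `${tag.name}: ${tag.url}`).join("\n");
}

// Character counts are a rough proxy for tokens.
console.log("names only:", getTags().length, "chars");
console.log("names + URLs:", getTagsWithUrls().length, "chars");
```

With thousands of tags the gap grows with every entry, and the agent pays for it on every call that lists tags.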

Make tool calls as fast as possible.

In experimenting, I initially downloaded post data and built an in-memory search for every tool call. This was quick to prototype but made each call take 2-3 seconds. In an agent loop, this caused response times to balloon rapidly. Currently, LLM agents largely have to execute tool calls in sequence, and every execution returns a result that must be fed back to the agent for another completion/transformation. So latency in tool calls compounds and should be avoided. I refactored the MCP server to use a pre-built index and now every tool call is resolved in under a second.
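The shape of that refactor can be sketched roughly like this. It assumes a simple inverted index (word to post IDs) with AND matching, which may not be how the real index works; the posts, `buildIndex`, and `searchPosts` are hypothetical.

```typescript
// Hypothetical post data for illustration.
type Post = { id: string; title: string; body: string };

const POSTS: Post[] = [
  { id: "a", title: "Building an MCP server", body: "Tools should be fast" },
  { id: "b", title: "Agent loops", body: "Latency in tool calls adds up fast" },
  { id: "c", title: "Proverbs", body: "A stitch in time" },
];

// Lowercase and split on anything that is not a letter or digit.
function tokenize(text: string): string[] {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter((word) => word.length > 0);
}

// Inverted index: each word maps to the set of post IDs containing it.
function buildIndex(posts: Post[]): Map<string, Set<string>> {
  const index = new Map<string, Set<string>>();
  for (const post of posts) {
    for (const word of tokenize(`${post.title} ${post.body}`)) {
      let ids = index.get(word);
      if (ids === undefined) {
        ids = new Set<string>();
        index.set(word, ids);
      }
      ids.add(post.id);
    }
  }
  return index;
}

// Built once at startup (or loaded from a pre-built file), not on every tool call.
const INDEX = buildIndex(POSTS);

// Tool handler: only cheap lookups. A post matches if it contains every query word.
function searchPosts(query: string): string[] {
  const words = tokenize(query);
  if (words.length === 0) return [];
  let ids: string[] | null = null;
  for (const word of words) {
    const matches = INDEX.get(word) ?? new Set<string>();
    ids = ids === null ? Array.from(matches) : ids.filter((id) => matches.has(id));
  }
  return (ids ?? []).sort();
}

console.log(searchPosts("mcp fast"));
```

The point is where the cost lands: `buildIndex` runs once, and each tool call is reduced to a few map lookups.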

Resources

I haven’t specified any Resources on the server, today. Resources are intended for use by the client application, to display things alongside LLM text/chat. I don’t have a real need for that, yet; I’m building this MCP server for LLM access to my site’s posts, not for displaying them in a chat/browser.

I’ll probably add resources in the future, once they’re supported by clients and more directly used. One interesting idea I’ve seen for resources is to hold pollable endpoints for long-running tool calls: call a tool that will kick off a long-running job, have the tool return a resource ID that the client can poll the server with to get the job result eventually.
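Here is a rough sketch of that polling idea, using only an in-memory map. Nothing here is from the MCP SDK or from this server: the `job://` URI scheme, `startJob`, and `readJob` are hypothetical names for a tool handler and a resource read handler.

```typescript
// A job is running, finished with a result, or failed with an error message.
type Job =
  | { status: "running" }
  | { status: "done"; result: string }
  | { status: "failed"; error: string };

// In-memory job store keyed by a made-up resource URI.
const jobs = new Map<string, Job>();
let nextId = 1;

// Tool handler: kick off the work and return a resource URI right away.
function startJob(work: () => Promise<string>): string {
  const uri = `job://${nextId++}`;
  jobs.set(uri, { status: "running" });
  work().then(
    (result) => {
      jobs.set(uri, { status: "done", result });
    },
    (err: unknown) => {
      jobs.set(uri, { status: "failed", error: String(err) });
    },
  );
  return uri;
}

// Resource read handler: the client polls this with the URI until the job settles.
function readJob(uri: string): Job | undefined {
  return jobs.get(uri);
}
```

The tool call itself returns immediately, so the agent loop is never blocked on the slow work; the client decides how often to poll.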

Prompts

Virtually no MCP LLM clients I know of are using prompts, so I haven’t built them into my server for now.

Discovery

This stuff is so early, I implemented a discovery mechanism based on someone’s phone snapshot of a presentation slide at a conference. You can find it at /.well-known/mcp.json.

Setup & Usage

You can use the MCP server remotely by connecting to https://joshbeckman--1818d72637f311f089f39e149126039e.web.val.run/mcp.
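For a sense of what goes over the wire: MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below only builds such a message and does not send it. The `query` argument name for search_posts is an assumption (check the tool's schema in the Inspector), and a real client performs an `initialize` handshake before calling tools.

```typescript
// Shape of a JSON-RPC 2.0 request that invokes an MCP tool.
type ToolCallRequest = {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
};

// Build the request body for a tool call; sending it is left to the client.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>,
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// "query" is an assumed argument name, not confirmed by the server's schema.
const request = buildToolCall(1, "search_posts", { query: "latency" });
console.log(JSON.stringify(request, null, 2));
```

In practice an MCP client library handles this framing for you, which is why the options below are the easier route.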

You can use the MCP server locally via mcp-remote:

npx -y mcp-remote https://joshbeckman--1818d72637f311f089f39e149126039e.web.val.run/mcp

Or you can use the streamable-http remote connection. Here’s an example configuration for Claude Code:

{
  "mcpServers": {
    "josh-beckman-notes": {
      "type": "http",
      "url": "https://joshbeckman--1818d72637f311f089f39e149126039e.web.val.run/mcp",
      "headers": {}
    }
  }
}

Please drop a line if you use it! Or if you have any suggestions on how to improve it.
